Botmunch

Care and Feeding of Googlebots

This is for you if:

- You want more visits from organic search

- You run a large ecommerce store with thousands of products

- You have a sneaking suspicion your site may actually be a big mess in the background

We will:

- Analyse a few million rows of your log files in extreme detail

- Report on the URL patterns causing breakage, duplicates or unnecessary redirection

- Help you fix them

- Monitor on a regular basis to catch any more problems as they happen

Call us on +44 (0)330 223 1164

Email us at info@taxonomics.co.uk

The problem

Google is one busy search engine. Do you know how many websites there are out there? And Google has to try and index all of them so it can offer them up in its search results. Unfortunately, Google just doesn't have the capacity to index every single page on your website, especially if you run a large ecommerce store. So it has to make choices.

Google is one busy search engine. Do you know how many websites there are out there? And Google has to try and index all of them so it can offer them up in its search results. Unfortunately, Google just doesn't have the capacity to index every single page on your website, especially if you run a large ecommerce store. So it has to make choices.

Indexing large ecommerce sites can prove particularly problematic, because huge catalogues that are frequently updated means huge numbers of potential URLs—all of which is very confusing to the Googlebots that crawl your site, indexing its pages. And any large change to the site’s URLs is going to leave a trail of potentially confusing redirects behind it.

This means Google could be indexing the wrong pages—pages that are broken, redirected, or otherwise useless— and presenting those pages to users, instead of the pages you actually want people to find. You know, like the pages that sell your products.

This means Google could be indexing the wrong pages—pages that are broken, redirected, or otherwise useless— and presenting those pages to users, instead of the pages you actually want people to find. You know, like the pages that sell your products.

And you know what, fixing up this sort of mess makes Google and you very happy; Google because it is getting really good value from its visits, and you, because a happy Google means more search visits. See our Hotels.com case study for how happy they were (spoiler: 12.5% happier).

How we can help

We figure out which pages Google is wasting its time on, and then we help you get it back on the right tracks. Below are the things Google wastes its time on, when it could be indexing relevant, useful pages of your website instead:

We figure out which pages Google is wasting its time on, and then we help you get it back on the right tracks. Below are the things Google wastes its time on, when it could be indexing relevant, useful pages of your website instead:

Broken Pages

Say you're a giant online fashion retailer. (Let's call you Sosa.) That swish party frock you sell—the hugely popular one that all the girls are fawning over on their Pinterest accounts—has had its page updated, because it's now available in green instead of blue. Marvellous! Unfortunately, that also means a new URL, and all the girls who click the broken Pinterest link are left feeling forlorn—and you've just lost a sale. And Google's only gone and indexed the old page but not the new one, hasn't it?

Duplication

You know what's a huge waste of time for Google? Indexing the same page over and over again, because it's served up at multiple URLs. This happens when, for example, www-dots are included and excluded (www.taxonomics.co.uk and taxonomics.co.uk are the same page—but Google doesn't see it that way), or when sometimes there's a slash at the end and sometimes there isn't (taxonomics.co.uk/ and taxonomics.co.uk—can you see where we're going with this?). There are countless other instances in which this can happen too, and all of them are bad. Trouble is, trying to fix this problem can make it even worse, because all of a sudden—as far as Google's concerned—Sosa has no page for dresses that are both little and black.

You know what's a huge waste of time for Google? Indexing the same page over and over again, because it's served up at multiple URLs. This happens when, for example, www-dots are included and excluded (www.taxonomics.co.uk and taxonomics.co.uk are the same page—but Google doesn't see it that way), or when sometimes there's a slash at the end and sometimes there isn't (taxonomics.co.uk/ and taxonomics.co.uk—can you see where we're going with this?). There are countless other instances in which this can happen too, and all of them are bad. Trouble is, trying to fix this problem can make it even worse, because all of a sudden—as far as Google's concerned—Sosa has no page for dresses that are both little and black.

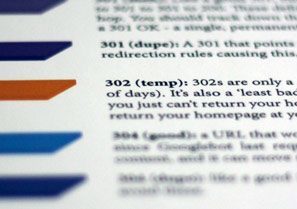

Temporary Redirects

Don't even get us started on temporary redirects. Just how much do you want to confuse Google, anyway? It won't know whether you're coming or going, so it'll index your site at both ends, which means more duplicate content.

Proliferation

Proliferation is, essentially, sending Google round the houses—or giving it billions of similar pages to crawl and index. This happens when you have, say, individual pages for kitten heels that are red, blue, green, size 4, size 5, size 6. Wouldn't it be better to tell Google about just one page of red kitten heels and then invite it in for a cuppa? Or at least send it on its way to indexing other useful pages. We’ve seen too many large sites that present billions of urls for Google to crawl to be surprised if it selects the wrong few hundred thousand.

How it works

We dive into your website's server logs to track the millions of requests search engine bots make for URLs on your domain. We then put our thinking hats on, organise the URLs into categories based on their construction, and analyse the responses—are the URLs broken? Redirected? Vanished into oblivion?

Getting hold of duplicated content is a little trickier, but our own bot makes short work of harvesting content from your site, and comparing it for duplicate content.

Once we've got this information right-side up and facing forward, we turn it into a beautiful map that shows the bots' crawl activity. This allows us to see your website's problem areas at a glance—and the wondrous opportunities for improvement behind each one. And we do like a good wondrous opportunity.

Once we've got this information right-side up and facing forward, we turn it into a beautiful map that shows the bots' crawl activity. This allows us to see your website's problem areas at a glance—and the wondrous opportunities for improvement behind each one. And we do like a good wondrous opportunity.

Oh, and your tech guys will be able to get a good handle on it too, thanks to those lovely maps we produce. They'll then be able to go in and stop Google requesting those crappy URLs we talked about—meaning it'll go ahead and index the good URLs instead. Which is nice.

We hate to brag (sort of), but we've found that some large ecommerce sites have enjoyed a 12.5% increase in search visits after being cleaned up using our Botmunch services. See our Hotels.com case study for more on this.

Get in touch

So—do you now have that niggling feeling that your ecommerce site isn't performing quite as well as it could be? Rightly so. It probably isn't.

Call us on +44 (0)330 223 1164

Email us at info@taxonomics.co.uk